Max Planck Fellow Group

Data-Driven Modeling of Natural Dynamics

Prof. Dr. Alexander Ecker

The mission of the Max Planck Fellow group “Data-Driven Modeling of Natural Dynamics” is to extend machine learning so that it can support the modeling, optimization and control of complex dynamical systems in physics and biology. Machine learning (ML) has recently triggered a revolution in computer vision and natural language processing, and it has made remarkable progress on problems previously considered exceedingly hard or even impossible. Famous examples are the games of chess and Go. Over the coming decade, machine learning techniques are expected to transform the process of scientific discovery in the quantitative natural sciences. Our goal is to contribute at the forefront of this transformation by establishing new machine learning approaches tailored to some of the most challenging problems in complex systems research: the dynamics of clouds and atmospheric turbulence, the modeling and control of the dynamics of the heart, and the design and dynamics of deep neural networks in the brain.

Modeling of cloud microphysics and turbulence

We have recently started a collaboration with the Department of Fluid Physics, Pattern Formation, and Biocomplexity (Eberhard Bodenschatz) focusing on modeling atmospheric turbulence (Bodenschatz et al., 2010). We are exploring ways to enable 3D image reconstruction from holographic measurements in cases where existing numerical methods fail. Eberhard Bodenschatz is conducting in situ measurements in clouds.

One of the instruments uses inline holography, pioneered at the Max Planck Institute for Chemistry. In inline holography, the diffraction pattern of a coherent monochromatic light beam is captured with a high-speed, high-resolution video camera (in the Max Planck CloudKite project of the Bodenschatz group, 25 megapixels at 75 Hz). Given knowledge of the beam and a scattering model, the particle field is then recovered by solving the inverse problem.
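To make the inverse problem concrete, the following is a minimal sketch of one textbook approach to numerical hologram refocusing, the angular spectrum method. It is an illustration under stated assumptions, not the software used by the groups named here: the function name, the 532 nm wavelength, the 3 µm pixel pitch, the grid size and the synthetic opaque particle are all hypothetical choices made for the example.

```python
import numpy as np

def angular_spectrum_propagate(field, wavelength, pixel_pitch, distance):
    """Propagate a complex 2D field by `distance` (metres) in free space.

    Textbook angular spectrum method; a negative distance back-propagates.
    """
    ny, nx = field.shape
    # Spatial frequencies (cycles per metre) of the sampling grid
    fx = np.fft.fftfreq(nx, d=pixel_pitch)
    fy = np.fft.fftfreq(ny, d=pixel_pitch)
    FX, FY = np.meshgrid(fx, fy)
    # Squared longitudinal frequency; non-positive values are evanescent
    kz_sq = (1.0 / wavelength) ** 2 - FX**2 - FY**2
    propagating = kz_sq > 0
    kz = 2 * np.pi * np.sqrt(np.where(propagating, kz_sq, 0.0))
    transfer = np.where(propagating, np.exp(1j * kz * distance), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * transfer)

# Hypothetical example: one opaque particle in a unit-amplitude plane wave
n = 256
pitch = 3e-6          # assumed pixel pitch in metres
wavelength = 532e-9   # assumed laser wavelength in metres
z = 5e-3              # assumed particle-to-sensor distance in metres
y, x = np.mgrid[:n, :n] - n // 2
aperture = np.where(x**2 + y**2 <= 5**2, 0.0, 1.0).astype(complex)

# Forward model: the camera records only the intensity of the diffracted field
hologram = np.abs(angular_spectrum_propagate(aperture, wavelength, pitch, z)) ** 2

# Naive reconstruction: back-propagate the recorded intensity to depth z
refocused = angular_spectrum_propagate(hologram.astype(complex), wavelength, pitch, -z)
```

In practice the particle depth is unknown, so a reconstruction of this kind has to be repeated over many candidate distances per hologram and the results searched for in-focus particles, which is one reason the analysis is computationally expensive.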

In the recently completed EUREC4A measurement campaign in the trade wind region of the Atlantic, 800,000 holograms of cloud particles were taken, of which we expect 200,000 to contain interesting data. Analysis with the current software takes 4 core hours per hologram, so analyzing 200,000 holograms requires a high-performance computer (we have a 4096-core GPU cluster). Our plan is to cut this time down substantially with appropriate deep learning algorithms. Such a method would be extremely useful not only for cloud microphysics measurements but also for inline holographic microscopy.
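The scale of this workload can be checked with a short back-of-the-envelope calculation. The sketch below uses only the figures quoted above and assumes, optimistically, that the analysis parallelizes perfectly and that the whole cluster is dedicated to it; the function name is hypothetical.

```python
def analysis_budget(n_holograms, core_hours_per_hologram, n_cores):
    """Return (total core hours, wall-clock hours) assuming ideal parallel scaling."""
    total_core_hours = n_holograms * core_hours_per_hologram
    wall_clock_hours = total_core_hours / n_cores
    return total_core_hours, wall_clock_hours

# Figures from the text: 200,000 holograms, 4 core hours each, 4096 cores
total_core_hours, wall_clock_hours = analysis_budget(200_000, 4, 4096)
wall_clock_days = wall_clock_hours / 24
print(f"{total_core_hours:,} core hours, about {wall_clock_days:.1f} days of wall-clock time")
```

Under these assumptions the campaign amounts to 800,000 core hours, or roughly eight days of uninterrupted use of the full cluster, so even a moderate per-hologram speedup would make a practical difference.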

Data-driven modeling of cardiac dynamics

The Biomedical Physics group (Stefan Luther) and the Cardiac Dynamics Group (Eberhard Bodenschatz) perform physical modeling of cardiac dynamics. In addition, the Luther group performs cutting-edge cardiac imaging (Luther et al., 2011; Christoph et al., 2018). We plan to develop machine-learning-based predictive models of electrical turbulence in the heart during cardiac fibrillation. Modeling cardiac fibrillation is challenging, among other reasons, because every heart is different and because the heart is constantly in motion when observed “in action.”

We plan to build upon recent progress in self-supervised learning to learn high-level representations of both the pulsating dynamics and the electrical turbulence directly from imaging data, without requiring sophisticated image processing to register the observations into a common frame of reference. By combining such self-supervised feature learning with transfer learning, we may be able to learn an end-to-end model of low-energy stimulation. Such a differentiable end-to-end model allows novel ways of predicting interventions (Walker et al., 2019), which may ultimately allow us to predict therapeutic interventions such as low-energy anti-fibrillation pacing (Luther et al., 2011).

Self-organization and design of deep networks for vision

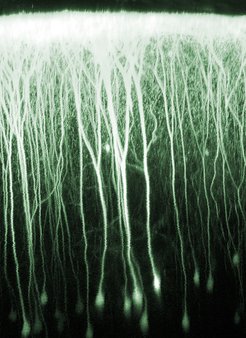

The brain is a dynamical system operating at multiple spatial and temporal scales. We hypothesize that understanding the dynamics of information processing in local cortical circuits (Ecker et al., 2010) requires understanding their contribution to behavioral performance of the entire system. We focus on area V1 – an early stage of visual information processing and the largest cortical area in the primate brain – and study its circuitry and architecture in the context of the ventral visual stream, the multistage hierarchy that supports object recognition.

Here, deep learning has opened exciting avenues for research. Firstly, we can capitalize on recent advances in computer vision: high-performing deep neural networks trained on object recognition provide us with working models of ventral visual stream processing. Secondly, it is now feasible for the first time to obtain data-driven models of neural function (Klindt et al., 2017; Cadena et al., 2019) that accurately represent processing at many levels of the ventral stream hierarchy.

Work at the Max Planck Institute for Dynamics and Self-Organization has predicted and uncovered universal quantitative principles of primate V1 architecture and traced their evolutionary origins (Kaschube et al., 2010). However, it remains enigmatic what advantages these principles confer on the performance of the visual system. Together with the Neural Systems Theory group (Viola Priesemann) and the Theoretical Neurophysics group (Fred Wolf) at MPI DS, we plan to investigate (a) whether the same quantitative laws emerge in artificial neural networks trained to optimally perform object recognition and (b) whether obeying these quantitative principles enhances the robustness or efficiency of artificial neural networks. Performance-optimized models will thus allow us to bridge the gap between mathematical theories of neural self-organization and the behavioral goal of the system, and to reveal the contribution of these principles to efficient and flexible neural processing.

Expected outcomes

Expanding the scope of machine learning approaches to enable data-driven and performance-optimized models of complex physical and biological systems is a major frontier of conceptual innovation in the natural sciences. Joint work with colleagues at the MPI DS on cutting-edge problems of dynamics and self-organization in physics and biology will enable us to pioneer applications ranging from fundamental problems in atmospheric and climate dynamics to translational applications such as predicting low-energy anti-fibrillation patterns. If we are successful, our joint work will achieve end-to-end prediction of the state and the dynamics of complex physical and biological systems directly from experimental data such as images, holograms or time series. This would obviate the need to first extract physical quantities of interest and then run costly forward simulations, and it would provide a novel avenue to generating precise phenomenological models of the collective behavior of the entire system.

Literature

[1] E. Bodenschatz, S.P. Malinowski, R.A. Shaw, F. Stratmann, Can We Understand Clouds Without Turbulence? Science 327, 970–971 (2010).

[2] S.A. Cadena, G.H. Denfield, E.Y. Walker, L.A. Gatys, A.S. Tolias, M. Bethge, A.S. Ecker, Deep convolutional models improve predictions of macaque V1 responses to natural images. PLOS Comput Biol 15, e1006897 (2019).

[3] J. Christoph, M. Chebbok, C. Richter, J. Schröder-Schetelig, P. Bittihn, S. Stein, I. Uzelac, F.H. Fenton, G. Hasenfuß, R.F. Gilmour Jr., S. Luther, Electromechanical vortex filaments during cardiac fibrillation. Nature 555, 667–672 (2018).

[4] A.S. Ecker, P. Berens, G.A. Keliris, M. Bethge, N.K. Logothetis, A.S. Tolias, Decorrelated Neuronal Firing in Cortical Microcircuits. Science 327, 584–587 (2010).

[5] M. Kaschube, M. Schnabel, S. Löwel, D.M. Coppola, L.E. White, F. Wolf, Universality in the evolution of orientation columns in the visual cortex. Science 330, 1113–1116 (2010).

[6] D.A. Klindt, A.S. Ecker, T. Euler, M. Bethge, Neural system identification for large populations separating “what” and “where.” In: Advances in Neural Information Processing Systems (NeurIPS) (2017). Available at: http://arxiv.org/abs/1711.02653 [accessed November 8, 2017].

[7] S. Luther, F.H. Fenton, B.G. Kornreich, A. Squires, P. Bittihn, D. Hornung, M. Zabel, J. Flanders, A. Gladuli, L. Campoy, E.M. Cherry, G. Luther, G. Hasenfuss, V.I. Krinsky, A. Pumir, R.F. Gilmour, E. Bodenschatz, Low-energy control of electrical turbulence in the heart. Nature 475, 235–239 (2011).

[8] E.Y. Walker, F.H. Sinz, E. Cobos, T. Muhammad, E. Froudarakis, P.G. Fahey, A.S. Ecker, J. Reimer, X. Pitkow, A.S. Tolias, Inception loops discover what excites neurons most using deep predictive models. Nature Neuroscience 22, 2060–2065 (2019).